August 26, 2008, 2:42 pm

In the next few days, we’ll be at the following events in Berlin:

Drop us a line if you’ll be there as well and we can arrange a meeting!

August 25, 2008, 11:02 am

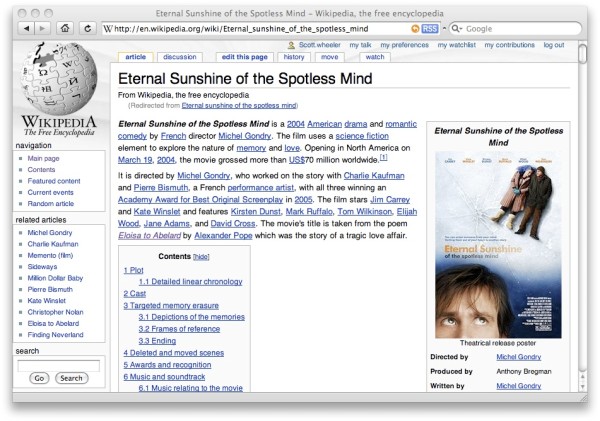

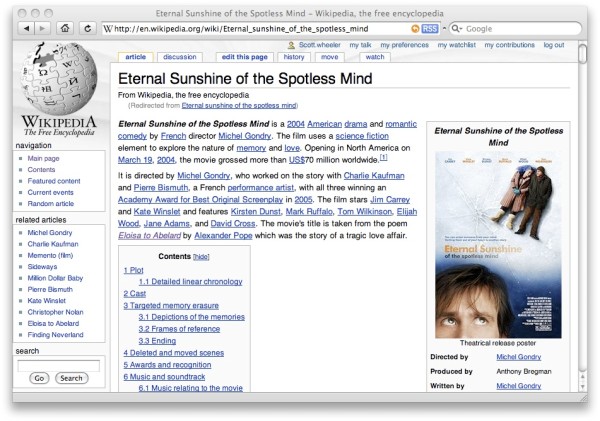

We’re very grateful that one of our users knocked out one of the items on our to-do list and created a Greasemonkey script for showing related articles on Wikipedia. If you have Greasemonkey installed in Firefox, you can just click on “install script” on this page to get related articles without being logged in to Wikipedia.

Per the comments on that page, we will start rolling out our Wikipedia demo in other languages probably about a week from now.

August 23, 2008, 8:38 pm

So, we think this is pretty cool beans. When we did our demo with a mashup of Wikipedia’s content, we knew that we wanted something that potential customers could quickly look at to get a feel for what our recommendation engine is capable of, and we got a lot of good feedback about that in our recent technology preview. On the other hand, we knew that we weren’t going to get the masses to switch over to using our Wikipedia interface.

One of the open questions for us as we pushed out the first bits of our web-services API last week was, “Can we get this content to show up in Wikipedia proper?”

Last night, after an extended hacking session in which I tried a number of strategies for DOM scripting to pull in external content (and had some misadventures with cross-site XMLHttpRequests), I managed to come up with a simple way of pulling in content from our web service via JSONP, and added support for JSON output to our web service along the way. For Wikipedians who are logged in, it only requires adding one line to your monobook.js file, and I’ve created a short how-to here. The source code, for interested hackers, is here.
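For readers who haven't run into JSONP: the browser loads a script URL from another site, and the server answers with the JSON wrapped in a call to a function named by the client, which sidesteps the cross-site XMLHttpRequest restrictions. The Python below is only a sketch of that wrapping step. The function name `to_jsonp`, the callback name, and the payload shape are all made up for illustration and are not taken from the actual web service.

```python
import json
import re

# Only allow plain (optionally dotted) JavaScript identifiers as callback names,
# so a caller cannot smuggle arbitrary script into the response.
_CALLBACK_RE = re.compile(r"[A-Za-z_$][\w$]*(?:\.[A-Za-z_$][\w$]*)*", re.ASCII)


def to_jsonp(payload, callback):
    """Wrap a JSON-serializable payload in a call to `callback`.

    `callback` is the function name the client asked for; how a real
    service receives that name (query parameter, path, ...) is not
    specified here.
    """
    if not _CALLBACK_RE.fullmatch(callback):
        raise ValueError("unsafe JSONP callback name: %r" % callback)
    return "%s(%s);" % (callback, json.dumps(payload))


# Hypothetical payload: the real JSON output format is not shown in this post.
example = to_jsonp({"item": "KDE", "links": ["GNOME", "Konqueror"]}, "showRelated")
```

On the page side, a script element pointing at the service URL would then execute that response and call the named function with the data.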

Here’s what it looks like:

When we launched our demo, a few people didn’t seem to quite get what our engine is doing. We’re not just analyzing the current page and pulling in a few important links; we’re jumping out a few levels in the link structure and analyzing and ranking what is usually several thousand links in the neighborhood of the target page. Often those pages are linked from the target page, but that’s hardly a surprise. I come from a background of doing research in web-like search, so it’s no coincidence that our approach to finding related pages takes some hints from PageRank and other link-based approaches to sorting out the web.
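To make the general idea concrete, here is a toy Python sketch of one well-known link-based approach: collect the pages within a few hops of the target, then score them with a random walk that keeps restarting at the target (often called personalized PageRank). This is not our engine's actual algorithm, which isn't described here. The function names, the parameters, and the tiny graph are all invented for illustration.

```python
from collections import deque
from typing import Dict, List, Set

Graph = Dict[str, List[str]]  # page title -> titles it links to


def neighborhood(graph: Graph, start: str, depth: int) -> Set[str]:
    """Breadth-first search: every page reachable from `start` in <= depth hops."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        page, dist = frontier.popleft()
        if dist == depth:
            continue  # do not expand beyond the requested depth
        for nxt in graph.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, dist + 1))
    return seen


def rank_related(graph: Graph, target: str, depth: int = 2,
                 damping: float = 0.85, iterations: int = 30,
                 top_n: int = 10) -> List[str]:
    """Rank pages near `target` by a random walk with restart at `target`."""
    nodes = neighborhood(graph, target, depth)
    # Keep only links that stay inside the neighborhood.
    out = {n: [m for m in graph.get(n, []) if m in nodes] for n in nodes}
    score = {n: (1.0 if n == target else 0.0) for n in nodes}
    for _ in range(iterations):
        nxt = {n: 0.0 for n in nodes}
        nxt[target] += 1.0 - damping  # the walker restarts at the target
        for n, links in out.items():
            if not links:
                nxt[target] += damping * score[n]  # dead end: jump back
                continue
            share = damping * score[n] / len(links)
            for m in links:
                nxt[m] += share
        score = nxt
    ranked = sorted((n for n in nodes if n != target),
                    key=lambda n: (-score[n], n))
    return ranked[:top_n]


# A made-up miniature link graph, purely for trying the functions out.
TOY_GRAPH: Graph = {
    "KDE": ["Qt", "GNOME", "Konqueror"],
    "Qt": ["KDE", "Trolltech"],
    "GNOME": ["GTK"],
    "Konqueror": ["KDE", "Qt"],
    "Trolltech": ["Qt"],
    "GTK": ["GNOME"],
}
related = rank_related(TOY_GRAPH, "KDE", depth=2)
```

Even on a toy graph like this, pages two hops away can outrank direct links, which is the point of looking beyond the current page.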

We’d invite people to try this out and of course to keep playing with our mashup; we’ve gotten so used to having related pages that it’s hard to go back to the vanilla Wikipedia — having the related pages there makes it really easy to sort out things like, “What are the important related topics?” or “Well, I know about X, what are the main alternatives?” And so on. We’ve got some other exciting stuff up our collective sleeves that we’ll be rolling out in the next couple of weeks, so stay tuned!

August 16, 2008, 10:12 pm

Work on the web services API for the encyclopedia continues, now with tags. Here’s a quick rundown:

You can get a list of supported tags here:

http://pedia.directededge.com/api/v1/tags/

That currently returns:

<?xml version="1.0" encoding="UTF-8"?>

<directededge version="0.1">

<tag>actor</tag>

<tag>author</tag>

<tag>book</tag>

<tag>company</tag>

<tag>film</tag>

<tag>musician</tag>

</directededge>

You can then get results from article queries based on a tag, using something like this:

http://pedia.directededge.com/api/v1/article/KDE/tags/company/

Which returns:

<?xml version="1.0" encoding="UTF-8"?>

<directededge version="0.1">

<item id="KDE">

<link>Trolltech</link>

<link>Novell</link>

<link>Hewlett-Packard Company</link>

<link>Nokia</link>

<link>World Wide Web Consortium</link>

<link>Mandriva</link>

<link>Canonical Ltd.</link>

<link>Sirius Satellite Radio</link>

</item>

</directededge>
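A query like this carries a single tag in its path, so a script that wants results for several tags makes one request per tag. Here is a rough Python sketch of that. The helper names (`tag_query_url`, `parse_links`, `related_by_tag`) are invented for this example, percent-encoding the article title is an assumption about how the service expects titles containing spaces, and the fetch function is injectable so the logic can be tried without touching the network.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, Iterable, List
from urllib.parse import quote
from urllib.request import urlopen

BASE_URL = "http://pedia.directededge.com/api/v1"


def tag_query_url(article: str, tag: str) -> str:
    """Build the URL for an article query filtered by one tag."""
    # Assumption: titles are percent-encoded; the post only shows "KDE".
    return "%s/article/%s/tags/%s/" % (BASE_URL, quote(article), quote(tag))


def parse_links(xml_text: str) -> List[str]:
    """Pull the text of every <link> element out of a response."""
    root = ET.fromstring(xml_text)
    return [link.text or "" for link in root.iter("link")]


def http_get(url: str) -> str:
    """Default fetcher: plain HTTP GET, decoded as UTF-8."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")


def related_by_tag(article: str, tags: Iterable[str],
                   fetch: Callable[[str], str] = http_get) -> Dict[str, List[str]]:
    """Issue one request per tag and keep each tag's ranked list separate."""
    return {tag: parse_links(fetch(tag_query_url(article, tag))) for tag in tags}
```

Calling `related_by_tag("KDE", ["company", "film"])` would issue two requests and return a dictionary with one list per tag.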

You can query any article for any tag (unlike in the web interface). Right now the results for “off topic” tags tend to be hit-or-miss. One of the other big items on our to-do list is improving tagged results in our engine.

I’m posting incremental updates like this in the hope that, if you’re planning on using our API in a mashup, you’ll let us know what you like and don’t like before we freeze v1.

We’ve also decided on a couple of limitations for the open API that aren’t true for our commercial API (running either on customer data sets or open data sets):

- You’re limited to 10 results.

- You can only filter on one tag at a time, meaning, you can’t get ranked results for movies and music simultaneously.

We think those are pretty reasonable and still give users a fair bit of room to play for free. If you’re interested in using our commercial API, drop us a line! We’ve also just created an announcement list where we’ll notify folks who are signed up of important details. You can sign up for that here.

August 15, 2008, 8:15 pm

This will still definitely be in flux, but I started getting parts of the REST API up if folks want to play with it. Warning: the format may change.

You can now hit something like:

http://pedia.directededge.com/api/v1/article/KDE

And get back:

<?xml version="1.0" encoding="UTF-8"?>

<directededge version="0.1">

<item id="KDE">

<link>GNOME</link>

<link>Unix-like</link>

<link>Desktop environment</link>

<link>Konqueror</link>

<link>Qt (toolkit)</link>

<link>KDE 4</link>

<link>GNU Lesser General Public License</link>

<link>X Window System</link>

<link>KPart</link>

<link>Widget toolkit</link>

</item>

</directededge>
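For anyone wanting to consume this from a script, here is a minimal Python sketch of parsing a response like the one above. The `RelatedArticles` class and the `parse_related` function are names made up for this illustration, and the sample is a shortened copy of the response shown above. Since the format may still change, treat the element names as a snapshot rather than a contract.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List


@dataclass
class RelatedArticles:
    article: str       # value of the <item id="..."> attribute
    links: List[str]   # related article titles, in the order returned


def parse_related(xml_text: str) -> RelatedArticles:
    """Parse a <directededge> response containing a single <item>."""
    root = ET.fromstring(xml_text)
    if root.tag != "directededge":
        raise ValueError("unexpected root element: %s" % root.tag)
    item = root.find("item")
    if item is None:
        raise ValueError("response contains no <item> element")
    links = [link.text or "" for link in item.findall("link")]
    return RelatedArticles(article=item.get("id", ""), links=links)


# Shortened copy of the response shown above.
SAMPLE_RESPONSE = """<?xml version="1.0" encoding="UTF-8"?>
<directededge version="0.1">
<item id="KDE">
<link>GNOME</link>
<link>Unix-like</link>
<link>Desktop environment</link>
</item>
</directededge>"""

related_to_kde = parse_related(SAMPLE_RESPONSE)
```

Keeping the links as an ordered list assumes the response order is the ranking, so the first entry would be the strongest match.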

I’ll be adding support for JSON output and filtering based on tags in the next few days. Once I’ve got a set of features there that I consider complete, I’ll freeze the “v1” so that people can create mashups based on that and be sure that the API will remain stable.

This does do capitalization correction, but does not do redirect detection. I’m debating whether to do that by default or use another REST path, since it requires another couple of DB queries and is, as such, a little slower.

August 14, 2008, 5:04 pm

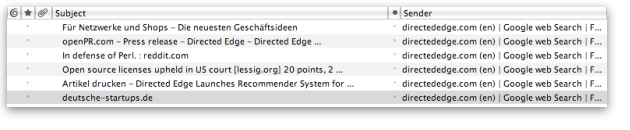

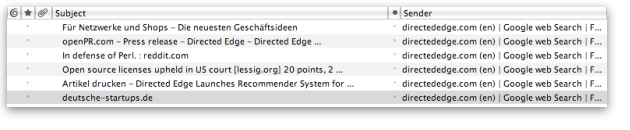

Like any new startup co-founder, I’m obsessive about seeing how what we’re doing trickles out over the web. Being an RSS warrior, today I went looking for a Google-search-to-RSS converter and found FeedMySearch, which now, a few hours into using it, seems to do quite well in pulling in new information about Directed Edge as it hits Google’s indexes.

FeedMySearch for directededge.com in Thunderbird

Nifty tool. Anything that stops me from compulsive reloading is a win. Now back to implementing new features. 🙂

August 13, 2008, 6:16 am

It’s an exciting day for us at Directed Edge. Today we’re finally putting our Wikipedia-based technology preview out there for people to play with. Before you click over to it, here’s a little about what you’re looking at.

As our name implies, we’re graph theory nerds. We look at the roughly 60 million links between the 2.5 million English Wikipedia pages, and with a few extra cues from the content, figure out pages related to the current one and put that in a little box in the upper left (as evident from the image on our home page). In some cases, if we’re able to pick out what sort of page it is, we also drop in a second box with just other pages of the same type.

Finding related pages in Wikipedia isn’t fundamentally what Directed Edge is about. We’ve got a super-fast in-house graph storage system that makes it possible to do interesting stuff with graphs quickly, notably figure out which pages are related. We’ve already got a couple of pilot customers lined up and will be working with a few more in the coming weeks to analyze their sites and figure out how things are related there. We’ve got a prototype of our web-services API that they’ll be using to send us break-downs of how stuff’s connected on their site and we’ll send back what we hope are some pretty groovy recommendations.

There are dozens of things in the pipe for us: ways to make recommendations better, ways to make the Wikipedia demo cooler, things customers want to see in our web services that we’d previously not thought of, and we could ramble on that for a while, but there are a few things that are on the very near horizon that didn’t quite make it into this round:

- An open web-services API for accessing the recommendations from our Wikipedia demo. This will be a stripped down, read-only version of our commercial API usable in web mash-ups.

- Better tagged (i.e. music, movies, authors, companies) recommendations. Support for tagged articles was one of the last features that made it into the current demo, and we’ve got some plans for improving the results for those.

- Pulling in non-English Wikipedia variants. We’ll probably start with German and French.

- More info about our commercial web-services API. We’re still nailing down some of the details, but as soon as we freeze the API for the first customers, we’ll add more docs to the site.

If you subscribe to our news feed you’ll see immediately when those services go live. Even though we’re still in the beta-phase and are only accepting a limited number of customers, if you think you’d be interested in using our engine for your site down the line, we’d encourage you to register now since we’ll be offering a discount for our commercial services to everyone who fills out their info in the contact form during the beta phase.

More soon. Enjoy!

August 7, 2008, 7:53 pm

We’ve now committed to going into public beta / technology preview next Wednesday, August 13th. We’ll be launching our new site with more information about our products and services at that time.

Press / bloggers may request invites by sending us a mail. It’ll be an exciting next few days as we iron out the last kinks and get ready for the onslaught.

The website will be a bit in flux, but our bio info is still available here.

Edit: If you’ve been testing the demo previously, the location has changed. Drop us a line for the new URL.